WordPress robots.txt is very important for SEO. To make sure your site ranks well in search engine results, you need to make its most important pages easy for search engine “robots” (“bots”) to crawl and index. A well-structured robots.txt file helps direct these bots to the pages you want indexed.

In this article we will answer the following questions:

- What is a robots.txt file and why it is important

- WordPress robots txt location

- Best robots txt for WordPress

- How to create a robots.txt file

- How to check the robots.txt file and submit it to Google Search Console

What is a robots.txt file for WordPress and why is it important?

When you create a new website, search engines send their robots to scan it and build a map of all its pages. This way, they know which pages to show as results when someone searches for relevant keywords. At a basic level, this is quite simple.

The problem is that modern websites contain many other elements besides pages. WordPress allows you to install, for example, plugins that often have their own directories. There is no need to show these in search results, since they are not part of your content.

What the robots.txt file does is provide a set of guidelines for search robots. It tells them: “Look here and index these pages, but do not enter other areas!” This file can be as detailed as you want, and it is very easy to create, even if you are a beginner.

In practice, search engines will still scan your site even if you do not create a robots.txt file. However, not creating one is unwise. Without this file, you leave robots free to crawl and index all the content of your site, including the parts you would rather keep out of search results.

More importantly, without a robots.txt file, robots will request many more pages of your site than they need to. This can adversely affect its performance. Even if your site's traffic is still small, page loading speed should always be a priority. After all, there are few things people dislike more than slow-loading websites.

WordPress robots txt location

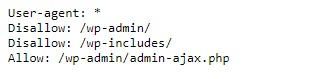

When you create a WordPress website, a robots.txt file is automatically generated and served from the root of your site. For example, if your site is located at wpdevart.com, you can find it at wpdevart.com/robots.txt and see something like this:

User-agent: *

Disallow: /wp-admin/

Disallow: /wp-includes/

This is an example of the simplest robots.txt file. Translated into plain language, the value after User-agent: declares which robots the rules apply to. An asterisk means that the rule is universal and applies to all robots. In this case, the file tells robots that they should not scan the wp-admin and wp-includes directories. These directories contain WordPress core and administration files that have no place in search results.
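As a rough illustration of how a well-behaved robot reads these rules, the sketch below feeds them to Python's standard `urllib.robotparser` module. The rule text is reconstructed from the description above, and the sample paths such as `/hello-world/` are made up for the example.

```python
from urllib.robotparser import RobotFileParser

# Assumed contents of the default file, reconstructed from the description above.
DEFAULT_RULES = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
"""

parser = RobotFileParser()
parser.parse(DEFAULT_RULES.splitlines())


def allowed(path, agent="Googlebot"):
    """Return True if `agent` may crawl `path` under DEFAULT_RULES."""
    return parser.can_fetch(agent, path)


# Hypothetical paths, chosen only to show one allowed and one blocked case each.
for path in ("/", "/hello-world/", "/wp-admin/", "/wp-includes/js/jquery.js"):
    print(path, "->", "allowed" if allowed(path) else "blocked")
```

Because the only group is `User-agent: *`, the answer is the same whichever robot name is passed in.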

Of course, you can add more rules to your file. Before you do this, you need to understand that this is a virtual file. Usually, a WordPress robots.txt file is located in the root directory, which is often called public_html or www (or named after your website).

It should be noted that the robots.txt file that WordPress creates by default is virtual, so you will not find it in any directory. It works, but if you want to make changes, you need to create your own file and upload it to the root directory.

We will look at how to create a robots.txt file for WordPress later in this article. First, let’s discuss how to determine which rules to include in the file.

Best robots txt for WordPress

It is not so difficult to create the best robots.txt for your WordPress website. So which rules need to be included in the robots.txt file? In the previous section, we saw an example of a robots.txt file generated by WordPress. It includes only two short rules, but for most sites they are enough. Let’s take a look at two different robots.txt files and see what each one does.

Here is our first example of a WordPress robots.txt file:

User-agent: *

Allow: /

# Disallowed Sub-Directories

Disallow: /payout/

Disallow: /photos/

Disallow: /forums/

This robots.txt file is created for a forum. Search engines usually index every forum thread. Depending on the topic of your forum, you may want to disallow indexing. For example, you may not want Google to index hundreds of short user discussions. You can also set rules that point to a specific forum thread in order to exclude it, and allow search engines to index the rest.

You will also notice a line that starts with Allow: / at the top of the file. This line tells robots that they can scan all pages of your site, except for the restrictions set below it. You will also notice that we set these rules to be universal (with an asterisk), as in the virtual WordPress robots.txt file.
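The reason Allow: / does not cancel the Disallow lines is rule precedence: Google documents that the most specific matching rule (the longest path) applies, and that the less restrictive rule wins a tie. The sketch below is a minimal illustration of that precedence for plain path prefixes, with the paths written without spaces. The function name `is_allowed` and the sample URLs are hypothetical, and other crawlers may resolve conflicts differently.

```python
# Rules from the forum example, as (directive, path prefix) pairs.
RULES = [
    ("allow", "/"),
    ("disallow", "/payout/"),
    ("disallow", "/photos/"),
    ("disallow", "/forums/"),
]


def is_allowed(path, rules=RULES):
    """Longest matching prefix wins; Allow wins a tie (assumed precedence)."""
    best_len, best_allow = -1, True  # no matching rule means the path is allowed
    for directive, prefix in rules:
        if not path.startswith(prefix):
            continue
        allow = directive == "allow"
        if len(prefix) > best_len or (len(prefix) == best_len and allow):
            best_len, best_allow = len(prefix), allow
    return best_allow


# Hypothetical URLs on the forum site.
for path in ("/", "/blog/my-post/", "/forums/thread-1", "/photos/cat.jpg"):
    print(path, "->", "allowed" if is_allowed(path) else "blocked")
```

Note that some parsers, including Python's own `urllib.robotparser`, apply the first matching rule instead, so for those tools it is safer to place the broad Allow: / line after the Disallow lines.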

Let’s check out another sample WordPress robots.txt file:

User-agent: *

Disallow: /wp-admin/

Disallow: /wp-includes/

User-agent: Bingbot

Disallow: /

In this file we set the same rules that WordPress uses by default. We also add a new set of rules that blocks the Bing search robot from crawling our site. Bingbot, as you can see, is the name of the robot.

You can name other search engines’ robots to restrict or allow their access as well. In practice, of course, Bingbot is a perfectly good robot (even if not as good as Googlebot). However, there are many malicious robots.

The bad news is that they do not always follow the instructions in the robots.txt file. Keep in mind that, although most robots will follow the instructions provided in this file, you cannot force them to do so.
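To see how the per-robot groups in the second example play out, here is a small sketch using Python's standard `urllib.robotparser`. It only shows what a robot that honors the file would conclude; the path `/hello-world/` is a made-up example.

```python
from urllib.robotparser import RobotFileParser

# The second example file: default rules plus a group that blocks Bingbot.
RULES = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/

User-agent: Bingbot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

# (robot name, path) pairs to check; the paths are hypothetical.
checks = [
    ("Googlebot", "/hello-world/"),
    ("Googlebot", "/wp-admin/"),
    ("Bingbot", "/hello-world/"),
]

results = {(agent, path): parser.can_fetch(agent, path) for agent, path in checks}
for (agent, path), ok in results.items():
    print(f"{agent:10} {path:15} {'allowed' if ok else 'blocked'}")
```

Googlebot falls under the universal group, while Bingbot matches its own group and is blocked everywhere.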

If you go deeper into the topic, you will find many suggestions on what to allow and what to block on your WordPress site. In our experience, though, fewer rules are often better. Here is an example of a good robots.txt file for a WordPress website, although the best rules differ from site to site:

User-agent: *

Disallow: /cgi-bin

Disallow: /?

Disallow: /wp-

Disallow: /wp/

Disallow: *?s=

Disallow: *&s=

Disallow: /search/

Disallow: /author/

Disallow: /users/

Disallow: */trackback

Disallow: */feed

Disallow: */rss

Disallow: */embed

Disallow: */wlwmanifest.xml

Disallow: /xmlrpc.php

Disallow: *utm*=

Disallow: *openstat=

Allow: */uploads

Sitemap: https://wpdevart.com/sitemap.xml

Traditionally, WordPress blocks the wp-admin and wp-includes directories. However, this is no longer the best solution. Plus, if you add meta tags to your images for promotion (SEO), there is no point in telling robots not to index the directories that contain them.

What your robots.txt file should contain depends on the needs of your site. So feel free to do more research!
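The larger example relies on wildcards, which are easier to reason about with a small experiment. The sketch below takes a subset of its rules and assumes the matching behavior that Google documents: `*` stands for any run of characters, a trailing `$` anchors the end of the URL, and the longest matching rule wins. The helper names `to_regex` and `is_allowed` and all sample URLs are hypothetical.

```python
import re

# A subset of the rules from the larger example, as (directive, pattern) pairs.
RULES = [
    ("disallow", "/wp-"),
    ("disallow", "*?s="),
    ("disallow", "*/feed"),
    ("disallow", "/xmlrpc.php"),
    ("allow", "*/uploads"),
]


def to_regex(pattern):
    """Translate a robots.txt pattern into a regex matched from the URL start."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal pieces and join them with '.*' where '*' appeared.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(path, rules=RULES):
    """Longest matching pattern wins; Allow wins a tie (assumed precedence)."""
    best_len, best_allow = -1, True  # no matching rule means allowed
    for directive, pattern in rules:
        if to_regex(pattern).match(path) is None:
            continue
        allow = directive == "allow"
        if len(pattern) > best_len or (len(pattern) == best_len and allow):
            best_len, best_allow = len(pattern), allow
    return best_allow


# Hypothetical URLs for a WordPress site.
for path in (
    "/hello-world/",
    "/?s=robots",
    "/hello-world/feed/",
    "/wp-login.php",
    "/wp-content/uploads/2020/logo.png",
):
    print(path, "->", "allowed" if is_allowed(path) else "blocked")
```

The last URL matches both Disallow: /wp- and Allow: */uploads. Under the longest-match assumption the Allow rule is more specific, which is why uploaded images stay crawlable.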

How to create a robots.txt

What could be simpler than creating a text file? All you have to do is open your favorite editor (like Notepad or TextEdit) and enter a few lines. Then save the file with the name robots and the .txt extension (robots.txt). It takes only a few seconds, so you may want to create a robots.txt for WordPress without using a plugin.

Save this file locally on your computer. Once you have made your own file, connect to your site via FTP (for example, with FileZilla).

After connecting to your site, go to the public_html directory. Now, all you need to do is upload the robots.txt file from your computer to the server. You can do this either by right-clicking the file in the local FTP navigator or simply by dragging it with your mouse.

It only takes a few seconds. As you can see, this method is easier than using a plugin.
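If you prefer a script to an editor and an FTP client, the same two steps can be sketched in Python with the standard `pathlib` and `ftplib` modules. The rules shown, the function names, the host, and the credentials are all placeholders; the upload assumes your host accepts plain FTP and that the site root is public_html.

```python
from ftplib import FTP
from pathlib import Path

# Example rules only; replace them with the rules your site needs.
RULES = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
"""


def write_robots(directory="."):
    """Save the rules as robots.txt in `directory` and return the file path."""
    path = Path(directory) / "robots.txt"
    path.write_text(RULES, encoding="utf-8")
    return path


def upload_robots(local_path, host, user, password, remote_dir="public_html"):
    """Upload robots.txt over FTP. All connection details are placeholders."""
    with FTP(host) as ftp:
        ftp.login(user=user, passwd=password)
        ftp.cwd(remote_dir)  # assumed name of the site root on the server
        with open(local_path, "rb") as fh:
            ftp.storbinary("STOR robots.txt", fh)


if __name__ == "__main__":
    saved = write_robots()
    print("Saved", saved.resolve())
    # Hypothetical host and credentials; uncomment and fill in your own.
    # upload_robots(saved, "ftp.example.com", "username", "password")
```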

How to check WordPress robots.txt and send it to the Google Search Console

After your WordPress robots.txt file has been created and uploaded, you can check it for errors in Google Search Console. Search Console is a set of Google tools designed to help you keep track of how your content appears in search results. One of these tools checks robots.txt. You will easily find it on the Search Console (formerly Google Webmaster Tools) admin page.

There you will find an editor field where you can add the code for your WordPress robots.txt file and click Submit in the bottom right corner. Google Search Console will ask you whether you want to use the new code or download a file from your website.

Now the platform will check your file for errors. If an error is found, information about it will be shown to you. You have seen a few examples of the WordPress robots.txt file, and now you have an even better chance of creating your perfect robots.txt file!
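Before submitting, obvious slips such as a misspelled directive or a space inside a path can also be caught locally. The sketch below is a deliberately simple checker written for this article, not a reimplementation of Google's validation; the function name `lint_robots` and the list of accepted directives are assumptions.

```python
# Directives this sketch accepts; anything else is reported as unknown.
KNOWN = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots(text):
    """Return a list of (line_number, message) for obvious mistakes."""
    problems = []
    seen_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN:
            problems.append((number, f"unknown directive '{field}'"))
        elif field == "user-agent":
            seen_agent = True
        elif field in ("allow", "disallow"):
            if not seen_agent:
                problems.append((number, "rule appears before any User-agent line"))
            if " " in value:
                problems.append((number, "path contains a space"))
            elif value and not value.startswith(("/", "*")):
                problems.append((number, "path should start with '/' or '*'"))
    return problems


# A deliberately broken sample to show the kind of messages produced.
SAMPLE = """\
User-agent: *
Disallow: / payout /
Disalow: /forums/
Allow: uploads
"""

for number, message in lint_robots(SAMPLE):
    print(f"line {number}: {message}")
```

A file that passes this check can still contain rules that do not do what you intended, so the Search Console check remains the final word.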